Amazon S3 Batch Operations is an AWS feature for performing large-scale storage management actions, such as copying objects, tagging objects, and changing access controls, across many objects with a single job. It makes working with large numbers of S3 objects easier and faster.

S3 Batch Operations can perform the following tasks:

- Copy objects to the required destination

- Replace all object tags

- Delete all object tags

- Replace access control list (ACL)

- Restore archived objects

- Set Object Lock retention

- Set Object Lock legal hold

- Invoke an AWS Lambda function to perform more complex data processing
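Outside the console, each of these tasks corresponds to an operation key in the `Operation` parameter of the S3 Control `CreateJob` API (for example `S3PutObjectTagging` for replacing tags). The following minimal Python sketch records that mapping; the human-readable labels and the `build_operation` helper are our own illustration, and only the operation keys come from the API.

```python
# Console-style task labels (our own wording) mapped to the operation keys
# accepted in the Operation parameter of the S3 Control CreateJob API.
BATCH_OPERATIONS = {
    "Copy objects": "S3PutObjectCopy",
    "Replace all object tags": "S3PutObjectTagging",
    "Delete all object tags": "S3DeleteObjectTagging",
    "Replace access control list (ACL)": "S3PutObjectAcl",
    "Restore archived objects": "S3InitiateRestoreObject",
    "Set Object Lock retention": "S3PutObjectRetention",
    "Set Object Lock legal hold": "S3PutObjectLegalHold",
    "Invoke AWS Lambda function": "LambdaInvoke",
}


def build_operation(task: str, params: dict) -> dict:
    """Hypothetical helper: wrap operation-specific params under the API key."""
    try:
        key = BATCH_OPERATIONS[task]
    except KeyError:
        raise ValueError(f"unknown Batch Operations task: {task!r}") from None
    return {key: params}


# Example: the Operation argument for a job that replaces object tags.
op = build_operation(
    "Replace all object tags",
    {"TagSet": [{"Key": "project", "Value": "archive"}]},
)
```

The tag key and value (`project` / `archive`) are placeholders; each operation has its own parameter shape, which the API reference documents.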

In this article, we will look at how to create object tags using S3 Batch Operations. We will generate an inventory report for a test S3 bucket, create and run an S3 Batch Operations job that adds the tags, and then use the new tags as a filter in a lifecycle policy.

Let’s get started!

Setup:

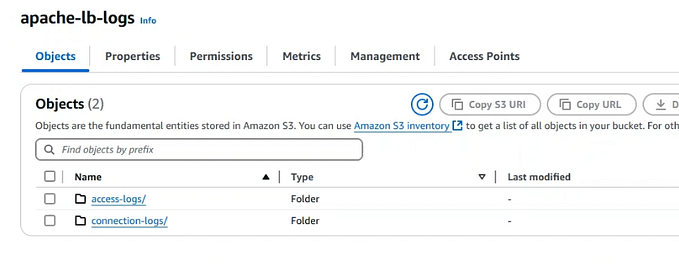

To begin with, create a test bucket and upload a few objects.

Note that none of the uploaded objects has any tags attached to it.
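The same setup can be scripted. This is a minimal sketch, assuming a boto3 S3 client is passed in as `s3`; the function name, the object keys, and the placeholder object contents are hypothetical. No `Tagging` argument is sent, so the objects start untagged, just like the console uploads.

```python
from typing import Iterable, List, Optional


def create_test_bucket(s3, bucket: str, keys: Iterable[str],
                       region: Optional[str] = None) -> List[str]:
    """Create `bucket` and upload one small, untagged object per key.

    `s3` is expected to behave like boto3.client("s3").
    """
    kwargs = {"Bucket": bucket}
    # Outside us-east-1, CreateBucket needs an explicit LocationConstraint.
    if region and region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    s3.create_bucket(**kwargs)

    uploaded = []
    for key in keys:
        # No Tagging argument is passed, so each object starts with no tags.
        s3.put_object(Bucket=bucket, Key=key, Body=f"test content for {key}".encode())
        uploaded.append(key)
    return uploaded
```

With boto3 installed, a call might look like `create_test_bucket(boto3.client("s3", region_name="eu-west-1"), "s3-batch-trial-test", ["a.txt", "b.txt"], region="eu-west-1")`.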

Inventory Setup:

Amazon S3 Inventory produces scheduled reports that list your objects and their metadata. This gives you visibility into buckets where listing millions of objects manually would be very tedious.

Let’s set up inventory on the S3 bucket to collect the required information about its objects. On the bucket's ‘Management’ tab, go to ‘Inventory configurations’ and click ‘Create inventory configuration’.

Enter the inventory name and choose the inventory scope (an optional prefix filter, and whether to include only current object versions or all versions).

Under ‘Report details’, enter the destination bucket where the generated inventory reports will be delivered. The console automatically adds a bucket policy to the destination bucket that allows S3 to write the reports there.

Choose the report frequency (daily or weekly), the output format, and the encryption settings. Choose CSV if the report will be used as a Batch Operations manifest.

Choose any additional fields as required and create the inventory.
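The console settings above map onto the `InventoryConfiguration` argument of the S3 `PutBucketInventoryConfiguration` API. A minimal sketch, assuming a daily CSV report encrypted with SSE-S3; the configuration ID, destination bucket, prefix, and chosen optional fields are placeholders.

```python
from typing import Sequence


def inventory_configuration(config_id: str, dest_bucket: str,
                            dest_prefix: str = "inventory",
                            frequency: str = "Daily",
                            fields: Sequence[str] = ("Size", "LastModifiedDate", "StorageClass")) -> dict:
    """Build the InventoryConfiguration dict for put_bucket_inventory_configuration."""
    return {
        "Id": config_id,
        "IsEnabled": True,
        # Current versions only; "All" would include noncurrent versions too.
        "IncludedObjectVersions": "Current",
        "Schedule": {"Frequency": frequency},  # "Daily" or "Weekly"
        "OptionalFields": list(fields),
        "Destination": {
            "S3BucketDestination": {
                # The destination bucket is given as an ARN, not a bare name.
                "Bucket": f"arn:aws:s3:::{dest_bucket}",
                "Prefix": dest_prefix,
                # CSV, so that the report can serve as a Batch Operations manifest.
                "Format": "CSV",
                # Server-side encryption of the report files with S3-managed keys.
                "Encryption": {"SSES3": {}},
            }
        },
    }
```

With boto3 this would be passed as `s3.put_bucket_inventory_configuration(Bucket="s3-batch-trial-test", Id="batch-inventory", InventoryConfiguration=inventory_configuration("batch-inventory", "my-inventory-reports"))`, where the `Id` argument must match the `Id` inside the configuration. Unlike the console, this API call does not add the destination bucket policy for you.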

The first inventory report can take up to 48 hours to be delivered to the destination. Each delivery creates a folder named with its date and time that holds the manifest files, while the inventory list files themselves are written to the data folder.

manifest.json describes the current inventory report rather than the objects themselves: ‘fileSchema’ lists the object properties (columns) collected in the report, and ‘files’ gives the path of each resulting inventory list file.

The manifest.checksum file contains the MD5 hash of manifest.json, so you can verify the manifest's integrity.
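Both files can be checked with the Python standard library. This minimal sketch verifies manifest.json against manifest.checksum and pulls out the column names from ‘fileSchema’ and the data-file keys from ‘files’; it assumes you have already downloaded both files as bytes/text, and the sample manifest at the end is hypothetical.

```python
import hashlib
import json
from typing import List, Tuple


def verify_manifest(manifest_bytes: bytes, checksum_text: str) -> bool:
    """Return True if manifest.json matches the MD5 stored in manifest.checksum."""
    return hashlib.md5(manifest_bytes).hexdigest() == checksum_text.strip().lower()


def summarize_manifest(manifest_bytes: bytes) -> Tuple[List[str], List[str]]:
    """Return (column names, data file keys) from an inventory manifest.json."""
    manifest = json.loads(manifest_bytes)
    # fileSchema is a comma-separated list of column names, e.g. "Bucket, Key, Size".
    columns = [name.strip() for name in manifest["fileSchema"].split(",")]
    # Each entry in "files" points to one inventory list file under data/.
    data_keys = [entry["key"] for entry in manifest["files"]]
    return columns, data_keys


# Hypothetical, heavily trimmed manifest for illustration.
sample = b'{"fileSchema": "Bucket, Key, Size", "files": [{"key": "inventory/data/part-1.csv.gz"}]}'
columns, data_keys = summarize_manifest(sample)
```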

The data folder contains the gzip-compressed CSV inventory files, which are generated at the frequency set in the inventory configuration.

The inventory is now ready to be used with S3 Batch Operations.
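To inspect a data file, decompress it and pair each value with the column names from the manifest's ‘fileSchema’, since the CSV rows carry no header of their own. A minimal stdlib sketch, assuming the `.csv.gz` file has already been downloaded as bytes; the sample rows are made up.

```python
import csv
import gzip
import io
from typing import Dict, List


def read_inventory_csv(gz_bytes: bytes, columns: List[str]) -> List[Dict[str, str]]:
    """Parse one gzip-compressed inventory CSV file into a list of dicts.

    `columns` should be the manifest's fileSchema names, in the same order.
    """
    text = gzip.decompress(gz_bytes).decode("utf-8")
    reader = csv.reader(io.StringIO(text))
    # Skip blank lines; zip pairs each value with its column name.
    return [dict(zip(columns, row)) for row in reader if row]


# Hypothetical two-row data file for illustration.
sample = gzip.compress(b'"s3-batch-trial-test","a.txt","10"\n"s3-batch-trial-test","b.txt","20"\n')
rows = read_inventory_csv(sample, ["Bucket", "Key", "Size"])
```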

IAM Role Creation:

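Next, S3 Batch Operations needs an IAM role that it can assume to perform the required actions on the S3 bucket.

Create an IAM policy with the JSON below, replacing s3-batch-trial-test with the name of your S3 bucket. If the inventory manifest or the job's completion report is stored in a different bucket, the role also needs s3:GetObject on the manifest location and s3:PutObject on the report location. The AWS documentation on granting permissions for S3 Batch Operations lists the permissions required for each operation.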

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "s3:PutObject",
        "s3:PutObjectAcl",
        "s3:PutObjectTagging"
      ],
      "Effect": "Allow",
      "Resource": "arn:aws:s3:::s3-batch-trial-test/*"
    },
    {
      "Action": [
        "s3:GetObject",
        "s3:GetObjectAcl",
        "s3:GetObjectTagging",
        "s3:ListBucket"
      ],
      "Effect": "Allow",
      "Resource": [
        "arn:aws:s3:::s3-batch-trial-test",
        "arn:aws:s3:::s3-batch-trial-test/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:GetObjectVersion"
      ],
      "Resource": [
        "arn:aws:s3:::s3-batch-trial-test/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject"
      ],
      "Resource": [
        "arn:aws:s3:::s3-batch-trial-test/*"
      ]
    }
  ]
}
```

Create an IAM role (in the console, choose ‘AWS service’ as the trusted entity; the service you pick does not matter because the trust policy is replaced next) and attach the IAM policy created in the previous step. Then update the role's trust relationship so that S3 Batch Operations can assume it:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "batchoperations.s3.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```
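If you would rather generate these documents per environment, the sketch below builds a narrower, tagging-only permissions policy (tag the target objects, read the manifest, write the completion report) together with the trust policy shown above, and creates the role through an IAM client passed in as `iam`. The bucket names, the `-policy` naming scheme, and the use of an inline policy via `put_role_policy` (instead of the separately created policy used in the console steps) are assumptions for illustration.

```python
import json
from typing import Tuple


def batch_role_policies(target_bucket: str, manifest_bucket: str,
                        report_bucket: str) -> Tuple[dict, dict]:
    """Return (permissions policy, trust policy) for a tag-replacement job role."""
    permissions = {
        "Version": "2012-10-17",
        "Statement": [
            {   # Apply tags to the objects listed in the manifest.
                "Effect": "Allow",
                "Action": ["s3:PutObjectTagging", "s3:PutObjectVersionTagging"],
                "Resource": f"arn:aws:s3:::{target_bucket}/*",
            },
            {   # Read the manifest (inventory manifest.json and data files, or a CSV).
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:GetObjectVersion"],
                "Resource": f"arn:aws:s3:::{manifest_bucket}/*",
            },
            {   # Write the job's completion report.
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{report_bucket}/*",
            },
        ],
    }
    trust = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "batchoperations.s3.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }
    return permissions, trust


def create_batch_role(iam, role_name: str, permissions: dict, trust: dict) -> str:
    """Create the role with an inline policy and return its ARN.

    `iam` is expected to behave like boto3.client("iam").
    """
    role = iam.create_role(RoleName=role_name,
                           AssumeRolePolicyDocument=json.dumps(trust))
    iam.put_role_policy(RoleName=role_name, PolicyName=f"{role_name}-policy",
                        PolicyDocument=json.dumps(permissions))
    return role["Role"]["Arn"]
```

For this article's setup, all three bucket arguments could simply be the same test bucket.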

With these prerequisites in place, we can set up our first S3 Batch Operations job.

Setting up the batch job:

S3 Batch Operations is available from the left-hand navigation pane of the S3 console. Click ‘Create job’ to start configuring.

Choose the AWS Region for the job. For the manifest, choose the S3 inventory report format and select the path of the inventory's manifest.json.

Alternatively, you can supply your own CSV manifest that lists the objects on which to perform the batch operation. Each row must contain the bucket name and the object key, plus the version ID for versioned objects.
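A CSV manifest like that can be generated from any list of objects. This minimal sketch writes one header-less row per object and returns the matching field list for the manifest spec. Object keys in the manifest must be URL-encoded; percent-encoding with `urllib.parse.quote` while leaving `/` unescaped is our assumption here, so check it against the manifest format documentation for unusual key names. It also assumes that either every row or no row carries a version ID.

```python
import csv
import io
from typing import Iterable, List, Optional, Tuple
from urllib.parse import quote


def build_csv_manifest(
    objects: Iterable[Tuple[str, str, Optional[str]]]
) -> Tuple[str, List[str]]:
    """Return (manifest CSV text, manifest field names).

    Each item is (bucket, key, version_id); use None as version_id for
    unversioned objects.
    """
    rows = list(objects)
    has_version = [version is not None for _, _, version in rows]
    if any(has_version) and not all(has_version):
        raise ValueError("either every row or no row may carry a version ID")

    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    for bucket, key, version in rows:
        # URL-encode the key (assumption: '/' is left unescaped).
        row = [bucket, quote(key, safe="/")]
        if version is not None:
            row.append(version)
        writer.writerow(row)

    fields = ["Bucket", "Key", "VersionId"] if rows and all(has_version) else ["Bucket", "Key"]
    return buf.getvalue(), fields
```

The returned field list is what a job would declare alongside the `S3BatchOperations_CSV_20180820` manifest format.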

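Next, choose the operation you want to perform. For this article, choose ‘Replace all object tags’ and enter the key and value of each tag to apply. Note that this operation replaces the entire tag set of each object, so any existing tags you do not list are removed.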

Next, configure the additional properties: enter a description and set a job priority. Choose an S3 path where the job's completion report will be stored.

Choose the IAM role created in the previous section from the dropdown.

Review the configuration and proceed to create the job.
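The whole job configuration corresponds to a single `create_job` call on the S3 Control API. This minimal sketch builds its keyword arguments for a tag-replacement job that reads an inventory-report manifest; the account ID, role ARN, manifest ARN and ETag, report bucket and prefix, priority, and tags are all placeholders you would supply. For a CSV manifest, the `Spec` would instead be `{"Format": "S3BatchOperations_CSV_20180820", "Fields": ["Bucket", "Key"]}`.

```python
import uuid
from typing import Dict


def tagging_job_request(account_id: str, role_arn: str, manifest_arn: str,
                        manifest_etag: str, report_bucket: str, tags: Dict[str, str],
                        report_prefix: str = "batch-reports", priority: int = 10,
                        description: str = "Replace object tags") -> dict:
    """Build keyword arguments for S3 Control create_job (tag replacement)."""
    return {
        "AccountId": account_id,
        # Keep the job waiting for confirmation, as in the console flow.
        "ConfirmationRequired": True,
        "Operation": {
            "S3PutObjectTagging": {
                # Replaces each object's whole tag set with these tags.
                "TagSet": [{"Key": k, "Value": v} for k, v in tags.items()]
            }
        },
        "Manifest": {
            "Spec": {"Format": "S3InventoryReport_CSV_20161130"},
            # ETag of manifest.json; surrounding quotes (as returned by
            # head_object) are stripped.
            "Location": {"ObjectArn": manifest_arn, "ETag": manifest_etag.strip('"')},
        },
        "Report": {
            "Bucket": f"arn:aws:s3:::{report_bucket}",
            "Prefix": report_prefix,
            "Format": "Report_CSV_20180820",
            "Enabled": True,
            "ReportScope": "AllTasks",
        },
        "Priority": priority,
        "RoleArn": role_arn,
        "Description": description,
        # Idempotency token: resending the same request with this token
        # does not create a second job.
        "ClientRequestToken": str(uuid.uuid4()),
    }
```

With boto3 this would be sent as `boto3.client("s3control").create_job(**request)`, whose response contains the new `JobId`.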

Once the job has been created successfully, its status is set to ‘Awaiting your confirmation to run’. If job creation fails, check the report stored in the path provided earlier, fix the error, and clone the job to retry with the previous configuration.

Select the job and click ‘Run job’. Review the settings and run it.

The job status then moves from Ready to Active to Completed.
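Running the job from code means requesting the `Ready` status with `update_job_status` and then polling `describe_job` until the job reaches a final state. A minimal sketch, assuming an S3 Control client is passed in as `s3control`; the function name and the 30-second polling interval are arbitrary choices. Note that the API reports the finished state as `Complete`, which the console shows as Completed.

```python
import time
from typing import Callable

# Job states after which no further progress happens.
TERMINAL_STATES = {"Complete", "Failed", "Cancelled"}


def run_and_wait(s3control, account_id: str, job_id: str,
                 poll_seconds: float = 30.0,
                 sleep: Callable[[float], None] = time.sleep) -> dict:
    """Confirm a job that awaits confirmation, then poll until it finishes.

    `s3control` is expected to behave like boto3.client("s3control").
    Returns the final Job description from describe_job.
    """
    # Equivalent of clicking 'Run job': request the Ready state.
    s3control.update_job_status(AccountId=account_id, JobId=job_id,
                                RequestedJobStatus="Ready")
    while True:
        job = s3control.describe_job(AccountId=account_id, JobId=job_id)["Job"]
        progress = job.get("ProgressSummary", {})
        print(f"{job['Status']}: {progress.get('NumberOfTasksSucceeded', 0)} succeeded, "
              f"{progress.get('NumberOfTasksFailed', 0)} failed")
        if job["Status"] in TERMINAL_STATES:
            return job
        sleep(poll_seconds)
```

The `sleep` parameter is injectable only so the loop can be exercised without real waiting.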

Let’s check the object's properties to confirm the tags were added, and here we go!

We can now use the new tags as filters in a lifecycle policy.
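For more than a handful of objects, the same check can be scripted with the S3 `GetObjectTagging` API. A minimal sketch, assuming a boto3 S3 client is passed in as `s3`; the helper names and the expected tag are hypothetical.

```python
from typing import Dict, Iterable, List


def object_tags(s3, bucket: str, key: str) -> Dict[str, str]:
    """Return an object's tags as a {key: value} dict.

    `s3` is expected to behave like boto3.client("s3").
    """
    response = s3.get_object_tagging(Bucket=bucket, Key=key)
    return {tag["Key"]: tag["Value"] for tag in response["TagSet"]}


def missing_tags(s3, bucket: str, keys: Iterable[str],
                 expected: Dict[str, str]) -> List[str]:
    """List the object keys whose tags do not include every expected pair."""
    # items() views support subset comparison with <=.
    return [key for key in keys
            if not expected.items() <= object_tags(s3, bucket, key).items()]
```

An empty result from `missing_tags(s3, "s3-batch-trial-test", keys, {"project": "archive"})` would mean every listed object carries the tag.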
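A lifecycle rule that filters on a tag looks like the following when expressed for the S3 `PutBucketLifecycleConfiguration` API. This minimal sketch moves tagged objects to Glacier 90 days after creation; the rule ID and the `project=archive` tag are placeholders.

```python
def tag_filtered_glacier_rule(rule_id: str, tag_key: str, tag_value: str,
                              days: int = 90) -> dict:
    """One lifecycle rule that transitions objects with a given tag to Glacier."""
    return {
        "ID": rule_id,
        "Status": "Enabled",
        # Only objects carrying exactly this tag key and value match the rule.
        "Filter": {"Tag": {"Key": tag_key, "Value": tag_value}},
        # Days are counted from each object's creation time in this bucket.
        "Transitions": [{"Days": days, "StorageClass": "GLACIER"}],
    }


lifecycle = {"Rules": [tag_filtered_glacier_rule("archive-tagged", "project", "archive")]}
```

With boto3 this would be applied as `s3.put_bucket_lifecycle_configuration(Bucket="s3-batch-trial-test", LifecycleConfiguration=lifecycle)`. That call replaces the bucket's entire lifecycle configuration, so include any existing rules in `Rules`.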

Here is a real example of how we used S3 Batch Operations. We had to set lifecycle policies across all buckets to transition S3 objects to Glacier 90 days after their creation. In one case, we had to copy S3 objects from one bucket to another, and the copies lost their original ‘last modified’ date. As a result, the lifecycle policies on the destination bucket did not transition the objects to Glacier, even though the original objects were older than 90 days, which increased our S3 costs. We then used S3 Batch Operations to re-tag the objects and used lifecycle policies to transition them to the correct storage class.

In summary, S3 Batch Operations lets you perform otherwise tedious S3 operational tasks in a few simple steps, saving a lot of time and effort!